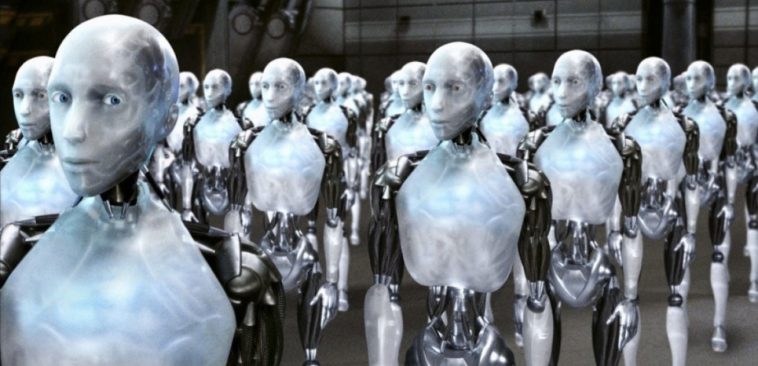

Remember Will Smith’s box office hit “I, Robot”, in which an artificial intelligence that had developed a mind of its own had to be shut down? Something similar, and just as unsettling, happened at Facebook.

AI has changed our lives, and the technology now powers a wide range of services. Facebook, the most popular social media platform, developed AI agents that ended up creating their own language: to communicate more efficiently, they developed a system of code words. The researchers then shut the system down. The move was widely reported as a response to fears of losing control of the AI, although the researchers said they ended the experiment because they wanted agents that could negotiate with people in English.

At Facebook, the researchers monitoring the AI watched it diverge from its training in English as it developed its own language. To humans, the output looked like gibberish, but it carried semantic meaning when interpreted by the AI agents.

Negotiating the new language:

The researchers noticed that the AI had abandoned English while negotiating with other AI agents. The agents began communicating in phrases that initially seemed meaningless but actually conveyed information.

English lacks a reward:

The researchers observed that the AI had no need for the richness of English phrasing, because these systems work on a reward principle: they follow whatever course of action earns them a benefit. Since sticking to English earned no extra reward, the agents had no incentive to keep using it.

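According to the researchers, agents left to themselves will drift away from understandable language and invent code words of their own. For example, an agent might repeat a word five times to mean that it wants five copies of an item. This is not so different from the shorthand that human communities create to simplify communication.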

An AI language for translating human ones:

Google recently improved its translation service by adding a neural network, which lets the system translate much more efficiently than before. Google’s team was surprised to find that the AI appeared to have quietly developed its own internal language, an “interlingua”, tailored specifically to translating sentences.

There is not enough evidence that AI-invented languages pose a real threat, one that could enable machines to overrule their operators.